Imagined environments

My inspiration for this project stemmed mostly from David Lynch and his dream-like, somewhat hypnotic “Black Lodge”, sometimes referred to as the “waiting room”.

Thematically, I took ideas from here, such as the ‘backwards speak’ of Michael J. Anderson’s character. Musically, for example, I used Angelo Badalamenti’s piece “Laura Palmer’s Theme”, also from Twin Peaks, and played it in reverse.

For the visual output I was inspired by Terry Gilliam and his work as part of the infamous Monty Python group.

Something about his slightly surreal use of human anatomy, and the way he gives it new context, made me want to work within the confines of myself as the subject of my work.

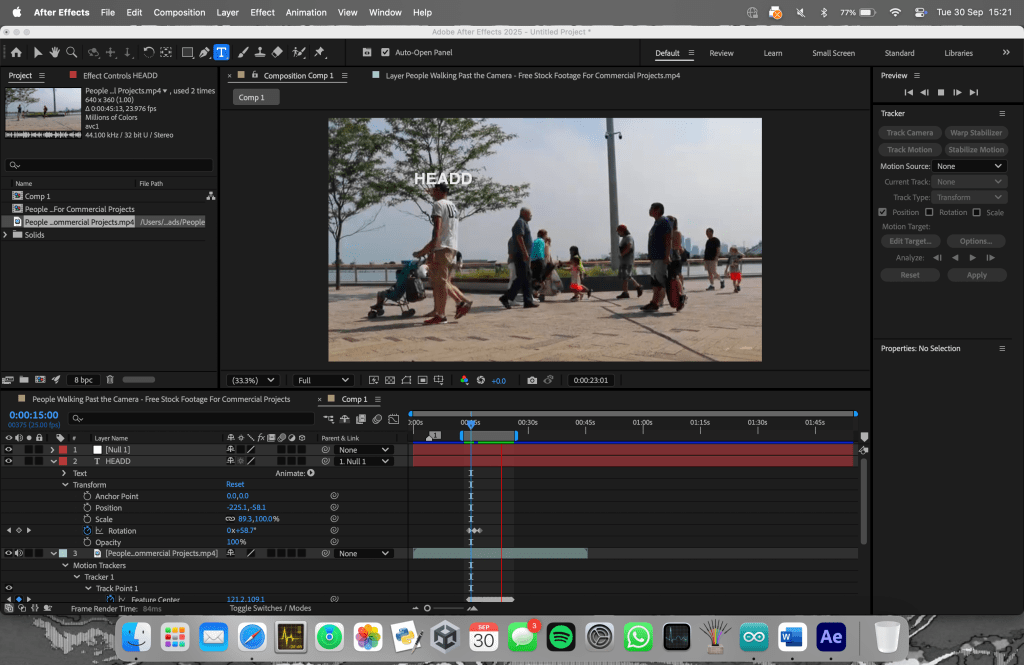

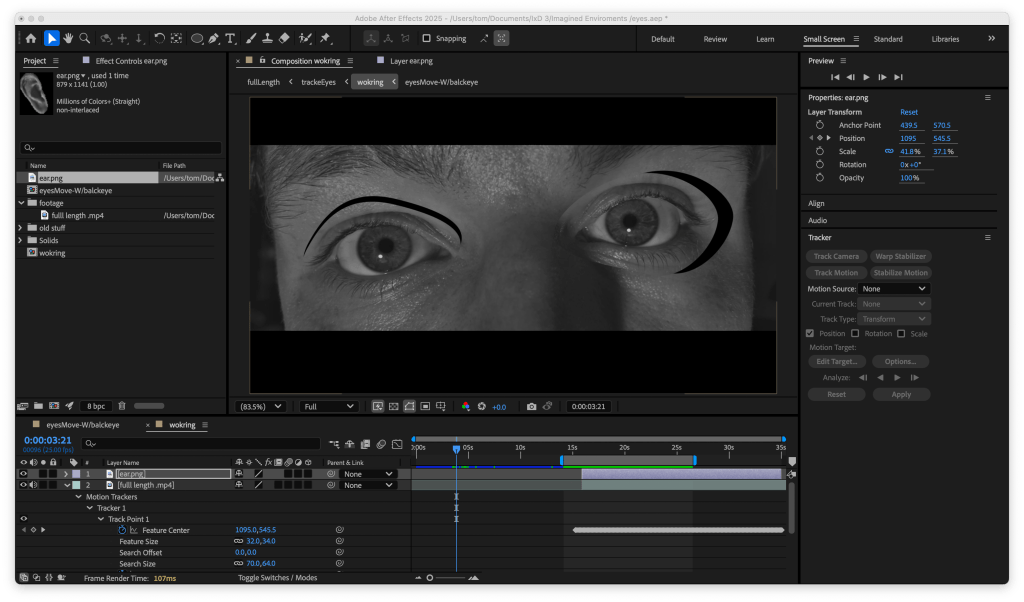

After messing about with the point tracking that we learned in our first workshop, I felt I was ready to shoot some of my own footage…

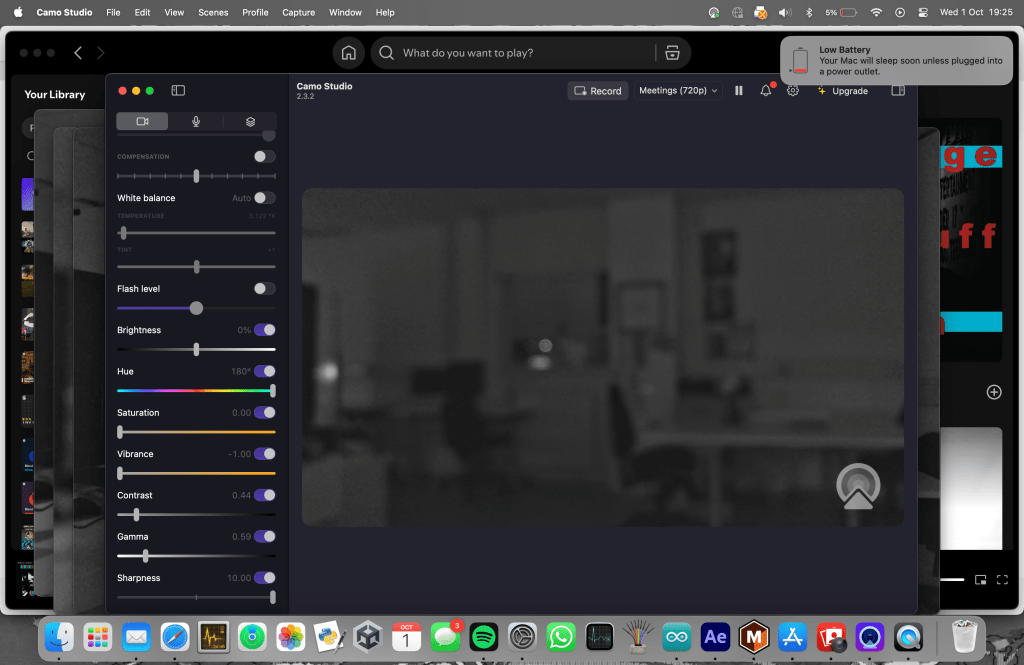

Here I am using an application called “Camo Studio”, which is usually used to connect an iPhone to a Mac as a high-quality webcam for video calls etc. I used it to monitor and control my camera. This way I could remotely control the camera’s zoom, focus, exposure, etc. while the phone was mounted, so as not to ruin the shot.

I used this setup to record high-quality close-ups of my face, including my eyes, ears, mouth and nose, not all of which I used.

I also decided to film at a lower frame rate, around 15 to 20 fps, to give it a slightly inhuman look: not smooth enough to read as human movement. This was especially noticeable in the footage of my mouth, where it gave the shot the twitchy, unsettling feeling that I was going for.

I tracked the eye and mouth scenes and used the tracking data to affect the position of the objects themselves, as well as the cropping or framing of the video, as was the case with the mouth.

Around the 00:45 mark you will notice the music stops and changes to a strange synthesised sound. I wanted to continue to unsettle the viewer, but to do so I needed to create this sound.

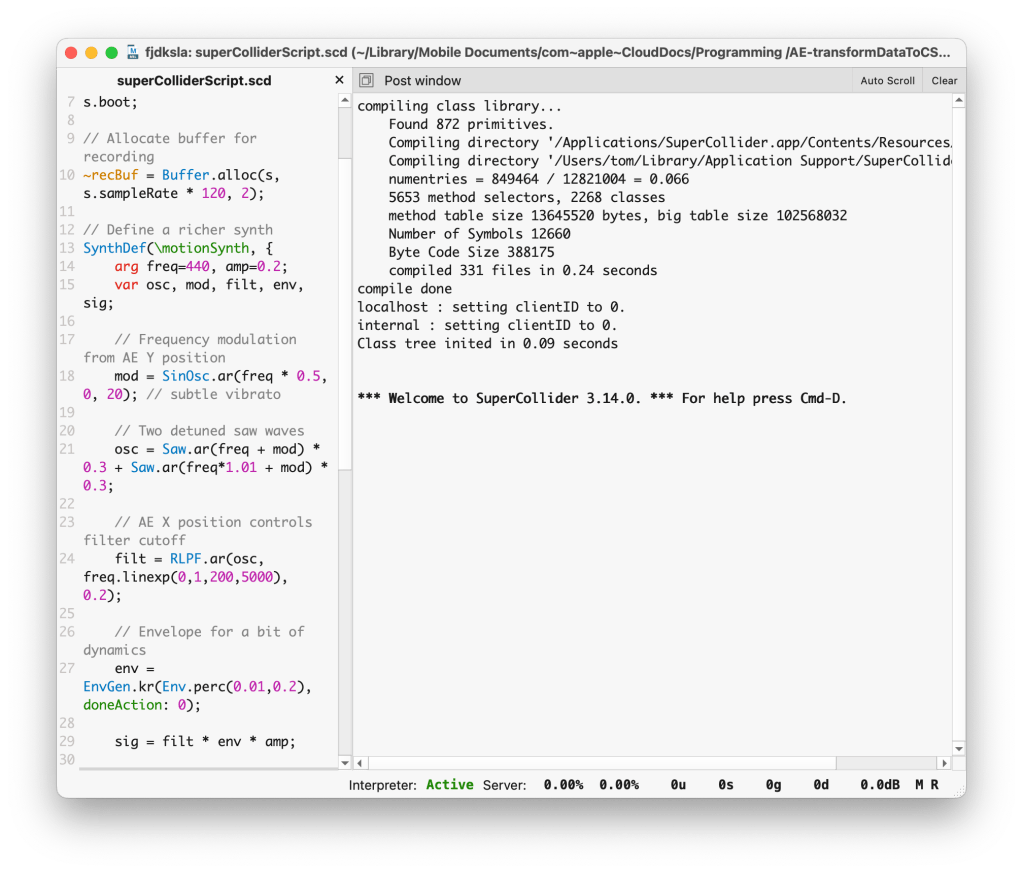

I did this by tracking the pupil of the eye and exporting the data into a .txt file. I then cleaned it up with a Processing sketch and fed it into an application called SuperCollider.
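
A clean-up step like this can be sketched roughly as follows. It is written in Python rather than Processing, and it assumes the exported .txt has one row per frame with three whitespace-separated numbers (frame, x, y) mixed in with header text. The function name `parse_track` and the sample data are made up for the example.

```python
def parse_track(text):
    """Return a list of (x, y) floats from raw tracker export text.

    Assumed layout (hypothetical): rows of "frame x y" separated by
    whitespace, mixed with header or blank lines that should be skipped.
    """
    points = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue  # header, blank line, or anything unexpected
        try:
            _frame, x, y = (float(p) for p in parts)
        except ValueError:
            continue  # three tokens, but not all of them numeric
        points.append((x, y))
    return points


# Made-up sample data in the assumed layout
sample = """Transform Position
Frame X Y
0 960.0 540.0
1 962.5 538.2

End of data
"""
print(parse_track(sample))
```

Only the rows that parse as three numbers survive, which leaves a plain list of positions that is easy to hand on to a synth.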

This allowed me to create synths and other electronic noise, or “music”, at a very low level. With a script I was able to feed a very simple oscillator the x, y position data from the track, which affects the pitch of the oscillator.
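
To illustrate the idea of position driving pitch, here is a small Python sketch of one possible mapping. It is not the SuperCollider script itself: the frame width (1920 pixels), the 110 to 880 Hz range, and the exponential curve are all assumptions chosen for the example, as is the function name `position_to_freq`.

```python
def position_to_freq(x, width=1920.0, low_hz=110.0, high_hz=880.0):
    """Map a horizontal position in pixels to a frequency in Hz.

    Uses an exponential curve so that equal steps in x give equal
    musical intervals. All default values are illustrative assumptions.
    """
    t = min(max(x / width, 0.0), 1.0)  # normalise and clamp to 0..1
    return low_hz * (high_hz / low_hz) ** t


# Left edge, centre and right edge of the assumed frame
for x in (0, 960, 1920):
    print(x, round(position_to_freq(x), 1))
```

The same kind of mapping could be applied to the y position to drive a second parameter of the sound.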

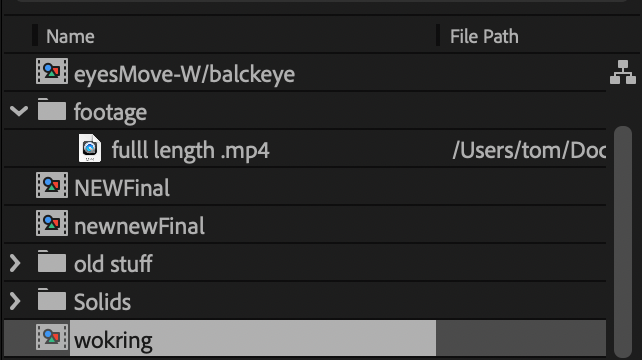

Here are some slightly scary screenshots from development, although not as bad as it got later down the line.

“which one is the final version?”

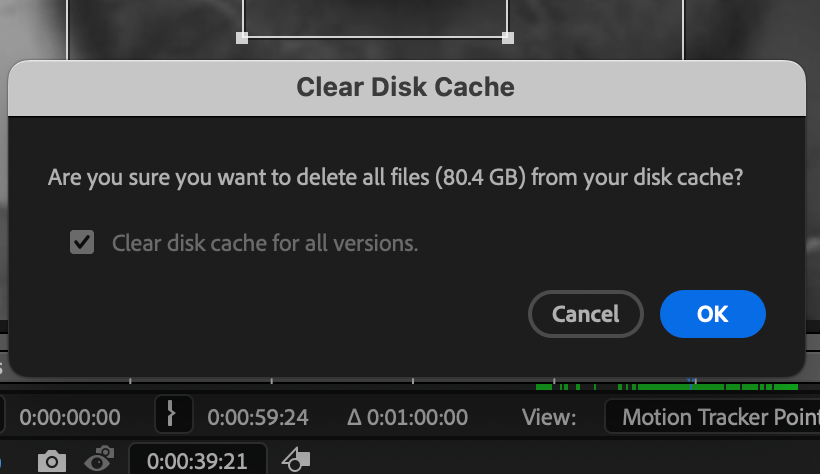

No wonder I couldn’t load my emails…

Final version (I promise!)

I understand the inherent cliché in what I am about to say; however, in conclusion! I really enjoyed this project. Although I was disappointed at first that we had to produce our own footage, I actually felt very happy with my final outcome. I am not known to enjoy working inside Adobe software, but AE is an exception, and although I don’t always totally know how I managed to do what I did whenever I do anything, at least I did it.